
The traditional gatekeeping of data by IT and technical teams is obsolete. This week our CEO wrote an article on Subredit about "Real-Time" Data, Democratized Workflows, and AI-Responsive Planning. We loved it, so we decided to expand on it and explain its ideas.

Modern organizations recognize that competitive advantage belongs to those who can democratize real-time data access across the entire enterprise. This comprehensive guide explores how to break down data silos, empower non-technical users with self-service analytics, and build cultures where data-driven decision-making becomes the norm rather than the exception.

The stakes are clear: companies lose 20-30% of their revenue annually due to data silos, while organizations with democratized data access can achieve time-to-insight reductions of up to 80%, decision-making speed improvements of 5x, and productivity gains of 63%. For businesses competing in 2025 and beyond, real-time data democratization is no longer optional—it's essential.

1. The Evolution From Data Gatekeeping to Democratization

The journey from centralized data control to democratized access represents one of the most significant organizational transformations of our era. Understanding this evolution illuminates why traditional approaches fail and why democratization has become imperative.

The Era of Data Gatekeeping

For decades, data existed within rigid hierarchies. Information technology departments and specialized analytics teams controlled access to corporate data, creating bottlenecks that persisted throughout organizations. This gatekeeping model emerged from legitimate concerns—data security, quality control, and compliance. However, the cost proved far higher than the perceived benefits.

In traditional setups, when a marketing manager needed customer behavior insights, they submitted a request to the analytics team. This team, typically understaffed relative to demand, queued the request among dozens of others. Weeks might pass before analysis began. By the time insights arrived, market conditions had shifted and the decision window had closed. This cycle repeated across finance, operations, sales, and product teams, creating pervasive organizational paralysis.

The Financial Impact of Data Silos

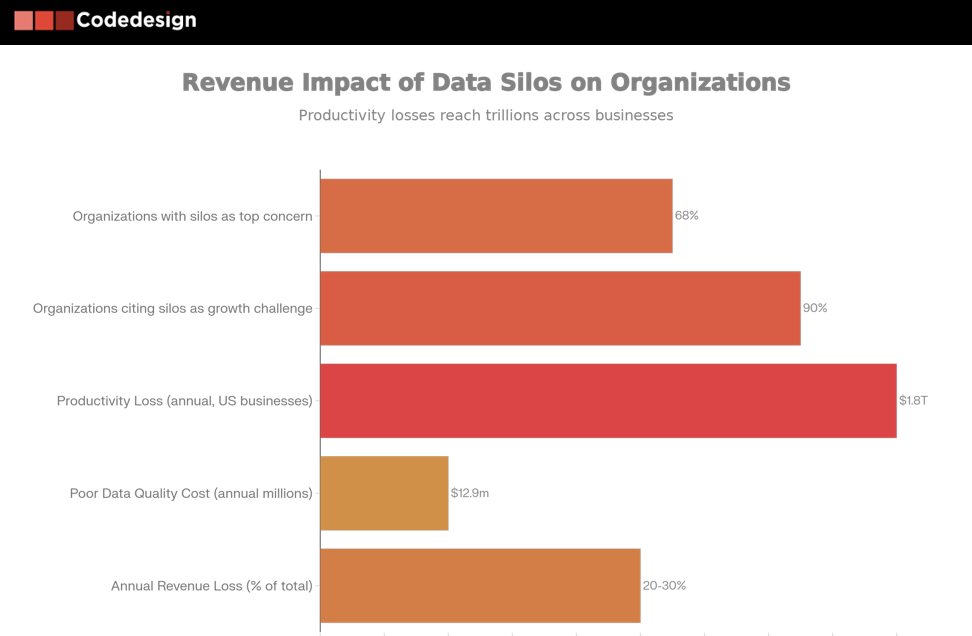

The numbers expose the true cost of gatekeeping. According to IDC Market Research, companies lose 20-30% of their revenue annually due to data silos. For a mid-sized business with $10 million in revenue, that represents $2-3 million in lost value every year. The sources of this loss are multifaceted:

- Poor data quality costs organizations an average of $12.9 million annually (Gartner research)

- Lost productivity from employees chasing data costs U.S. businesses $1.8 trillion annually

- 90% of organizations cite data silos as a challenge to growth

- 68% report data silos as their top concern

These aren't abstract metrics. When a sales team can't access real-time pipeline data, opportunities slip away. When operations can't see inventory patterns across regions simultaneously, inefficiencies compound. When customer service lacks access to purchase history and behavioral data, interactions become transactional rather than strategic. When product teams can't monitor feature adoption in real-time, roadmaps drift from user needs.
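The revenue figure quoted above is simple arithmetic, and it can help to rerun it against your own numbers. The sketch below assumes the 20-30% range from the text; the function name `silo_loss_range` is made up for illustration.

```python
def silo_loss_range(annual_revenue: int, low_pct: int = 20, high_pct: int = 30) -> tuple[float, float]:
    """Return the (low, high) estimated annual loss for a given revenue.

    The 20-30% default range is the figure quoted in the text, not a
    measurement of any particular company.
    """
    return annual_revenue * low_pct / 100, annual_revenue * high_pct / 100


low, high = silo_loss_range(10_000_000)
print(f"${low:,.0f} to ${high:,.0f}")  # $2,000,000 to $3,000,000
```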

Real-time data democratization matters now because the window for decision-making has compressed dramatically. Traditional batch processing cycles—where data from yesterday might be analyzed today with insights available tomorrow—cannot support modern business velocity. Markets move at digital speed. Customer preferences shift across channels instantaneously. Supply chains span the globe with thousands of interdependencies.

Organizations that win in this environment operate on real-time information. They see what happened as it happens, understand why it happened immediately, and decide how to respond while the moment remains actionable. Every hour of delay compounds opportunity cost.

Beyond velocity, democratization addresses a fundamental shift in competitive strategy. Success no longer derives from having data others don't—data has become commoditized. Success derives from what you do with that data faster than competitors can respond. That capability emerges not from data scientists locked in analytics teams but from thousands of empowered employees throughout the organization who can access, understand, and act on insights immediately.

Real-World Impact Across Industries

Manufacturing: Production floor teams accessing real-time quality metrics can detect anomalies and adjust equipment settings immediately, preventing defects before products move downstream. A global automobile manufacturer implementing real-time condition monitoring reduced unplanned downtime by 78% and improved equipment efficiency by 2x.

Healthcare: Clinical staff accessing patient data in real-time make treatment decisions with complete context. Real-time systems enable early detection of high-risk patients, optimize resource allocation, and improve patient outcomes through precision medicine. Real-time analytics enabled four hospitals to forecast admissions hour by hour, optimizing staff scheduling and preventing burnout.

E-commerce: Marketing teams analyzing customer behavior data without waiting for analytics departments adjust campaigns instantaneously. Real-time dashboards reveal which product categories drive engagement, enabling dynamic merchandising and pricing adjustments that capture seasonal demand peaks.

SaaS: Product teams monitoring feature adoption in real-time identify usage patterns that reveal user preferences. Teams that see adoption lag can intervene immediately—improving onboarding, adjusting documentation, or reconsidering product positioning. By monitoring metrics continuously, SaaS companies detect churn risks before customers leave, enabling retention interventions with higher success rates.

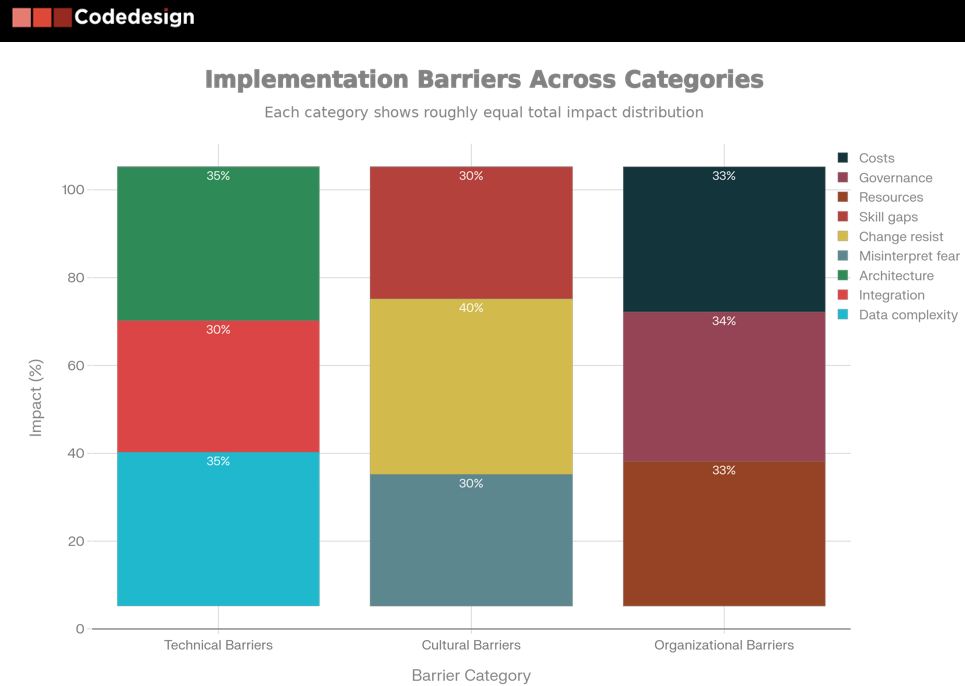

2. Breaking Down the Barriers: Technical, Cultural & Organizational

While the case for democratization is compelling, the path forward is obstructed by interconnected technical, cultural, and organizational barriers. Understanding these barriers—and more importantly, how they reinforce each other—is essential for designing effective solutions.

Technical Barriers: The Complexity of Real-Time Systems

Traditional data architectures simply weren't designed for the combination of real-time speed, scale, and accessibility that democratization demands. Legacy batch processing systems extract data nightly, run transformations overnight, and present results the next morning—a cycle optimized for cost rather than velocity. Real-time architectures require entirely different approaches.

The streaming data challenge: Real-time systems must ingest data continuously from diverse sources—sensors, applications, transactions, user interactions—often at extreme velocity. A major financial services firm might process millions of transactions per minute. A retailer tracks inventory movements across thousands of stores continuously. A manufacturing facility captures sensor readings from hundreds of machines every second. Traditional data warehouses built on SQL and nightly ETL cycles cannot handle this velocity and volume.

Data architecture requirements: Supporting real-time data requires rethinking foundational architecture layers. Data must flow continuously through ingestion systems that capture, validate, and route information. Stream processing engines like Kafka Streams, Apache Spark Streaming, and Apache Flink must transform data while it's in motion. Real-time storage systems must maintain freshness while supporting rapid queries. Access layers must deliver insights instantly to multiple concurrent users. This multi-layer architecture introduces complexity that legacy teams often lack the expertise to manage.

Integration complexity: Most organizations operate dozens of systems—ERPs, CRMs, marketing automation platforms, billing systems, IoT sensors, log aggregation systems. Real-time democratization requires synchronizing these disparate sources continuously, a problem that exposes data quality issues, format incompatibilities, and schema mismatches that batch processes could tolerate.

Cultural Barriers: The Fear of Democratization

Technical barriers pale compared to cultural resistance. Organizations have built cultures around centralized data control, and disrupting that requires confronting deeply held assumptions.

Fear of data misinterpretation: Many analytics leaders resist democratizing data access because they fear non-technical users will misinterpret findings, draw incorrect conclusions, or make poor decisions based on incomplete understanding. However, research suggests the solution isn't gatekeeping but rather improving data literacy alongside access.

The "not invented here" syndrome: Teams often view analytics platforms built by others with skepticism. This dynamic creates political friction, as teams build parallel analytics systems rather than trusting centralized platforms.

Resistance from analytics teams: Data professionals sometimes view democratization as threatening to their value and status. This resistance—often unconscious—manifests as requiring excessive governance approvals, building systems too complex for non-experts, or emphasizing technical purity over usability.

Change fatigue: Many organizations have launched failed analytics initiatives. When new democratization initiatives arrive, skepticism is warranted and resistance is rational.

Organizational Barriers: Resources, Governance, and Costs

Even organizations committed to democratization face structural obstacles embedded in how they operate.

Resource constraints: Building real-time data infrastructure and supporting its adoption requires sustained investment. Organizations need data engineers, architects, analytics professionals, and change management resources. Many organizations lack these specialists, and hiring external talent competes with other technology investments.

Governance challenges: Democratizing access while maintaining security and compliance seems contradictory. Solutions require building metadata systems, access control infrastructure, and continuous monitoring processes.

The scaling paradox: Organizations often underestimate costs associated with scaling data systems. A data warehouse that performs well with ten analysts can become sluggish with thousands of concurrent users. Storage costs multiply. Governance complexity increases exponentially. Support costs rise.

How Barriers Reinforce Each Other

Understanding these barriers as isolated challenges misses their interconnection. Technical complexity creates cultural problems: if systems are hard to use, adoption slows and business value fails to materialize. Governance concerns drive technical complexity: adding security controls and audit capabilities increases system overhead. Resource constraints limit the ability to address cultural concerns.

Breaking these reinforcing loops requires simultaneous progress on multiple fronts. Technical solutions alone fail without cultural readiness. Cultural transformation stalls without adequate governance. Governance implementation fails without proper resources.

3. The Self-Service Data Culture: Building Independence and Innovation

Building organizational capacity for self-service data analysis represents a fundamental shift from "analytics is something done to business users" to "analytics is something business users do." This shift unlocks innovation and agility that centralized models cannot achieve.

Defining Self-Service Data Culture

Self-service data culture isn't about giving everyone access to raw databases—it's about building infrastructure, governance, and capabilities that enable informed self-sufficiency within appropriate boundaries. In a mature self-service culture, business users can answer their own questions quickly, exploring hypotheses without waiting for analyst support. Analysts shift from answering repetitive questions to solving complex strategic problems. Data becomes embedded in workflow rather than something users access separately.

Empowering Non-Technical Users Through Technology

The technological foundation for self-service is no-code/low-code platforms that remove the requirement for SQL knowledge, complex data transformations, or technical expertise:

- Modern visualization tools: Platforms like Tableau, Power BI, and Looker enable users to connect to data sources and create interactive visualizations through drag-and-drop interfaces.

- Natural language interfaces: Newer platforms add natural language querying, allowing users to ask questions in English: "What was our revenue trend by region last quarter?"

- Embedded analytics: Insights can be embedded within applications users already use daily, making analytics contextual rather than separate.

- Self-service data preparation: Users can combine data from multiple sources and transform data into analysis-ready formats without writing code.

Building Data Literacy Foundations

Self-service cultures require participants to develop data literacy—understanding what data represents, how to interpret findings, what biases might exist, and how to avoid common analytical mistakes. Building this capability requires systematic investment:

- Foundational training: Core training covering data fundamentals, metric interpretation, statistical concepts, and critical thinking about data.

- Peer learning: Establishing data communities where users share techniques, ask questions, and learn from colleagues.

- Learning by doing: Applied projects where business users work on problems that matter, developing skills while driving results.

- Continuous education: As platforms evolve and business needs change, organizations must refresh skills continuously.

Governance: Enabling Access Without Losing Control

Effective governance is light on restrictions and heavy on transparency:

- Role-based access control: Define roles and assign appropriate data access based on job requirements.

- Data governance layers: Build governance into data architecture rather than layering it on top.

- Metadata management: Metadata enables discovery, understanding, and governance at scale.

- Balancing control and empowerment: Users understand rules, see why they exist, and can work within them without friction.
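To make role-based access control concrete, here is a minimal sketch. The role names, dataset names, and the `can_read` helper are hypothetical; real platforms provide their own access-control features, and this only illustrates the idea of granting by role while logging every decision for transparency.

```python
from dataclasses import dataclass, field

# Hypothetical roles and dataset names, for illustration only.
ROLE_GRANTS: dict[str, set[str]] = {
    "marketing_analyst": {"campaign_metrics", "web_traffic"},
    "finance_manager": {"revenue_ledger", "campaign_metrics"},
}


@dataclass
class AccessLog:
    """Records every access decision so governance stays transparent."""
    entries: list[tuple[str, str, bool]] = field(default_factory=list)

    def record(self, role: str, dataset: str, allowed: bool) -> None:
        self.entries.append((role, dataset, allowed))


def can_read(role: str, dataset: str, log: AccessLog) -> bool:
    """Allow access only if the role has been granted the dataset."""
    allowed = dataset in ROLE_GRANTS.get(role, set())
    log.record(role, dataset, allowed)  # denied attempts are logged too
    return allowed


log = AccessLog()
print(can_read("marketing_analyst", "web_traffic", log))     # True
print(can_read("marketing_analyst", "revenue_ledger", log))  # False
```

Keeping the grants in one visible table is one way to let users see the rules and understand why a request was denied.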

4. Real-Time Architecture For The Rest of Us: Making Complexity Accessible

Real-time data architecture need not be incomprehensibly complex. Understanding the essential layers and how they work together demystifies the technology and enables non-technical leaders to make informed platform decisions.

The Architecture Stack: Four Essential Layers

Data ingestion layer: Real-time systems begin with mechanisms that capture data from diverse sources continuously. Technologies like Apache Kafka, AWS Kinesis, and Google Pub/Sub serve this function.

Stream processing layer: Raw data streams require transformation, filtering, and aggregation. Technologies include Apache Spark Streaming, Apache Flink, and Kafka Streams.

Real-time storage layer: Real-time data warehouses like Snowflake, Google BigQuery, and Databricks provide storage that maintains freshness while supporting instant retrieval. These systems automatically index data, optimize storage, and parallelize queries.

Access layer: Users interact with real-time data through visualization tools, operational dashboards, embedded analytics, and decision-support systems.
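The four layers can be sketched end to end in a few lines. This is an in-memory stand-in, not how Kafka, Flink, or a cloud warehouse work internally; the event shape and every function and class name below are assumptions chosen for illustration.

```python
from collections import defaultdict
from typing import Iterable, Iterator


def ingest(source: Iterable[dict]) -> Iterator[dict]:
    """Ingestion layer: pass events through one at a time, skipping malformed ones."""
    for event in source:
        if {"ts", "store", "units"}.issubset(event):
            yield event


def process(events: Iterable[dict], window_s: int = 60) -> Iterator[tuple[tuple[int, str], int]]:
    """Stream processing layer: assign each event to a tumbling time window."""
    for event in events:
        window_start = event["ts"] - event["ts"] % window_s
        yield (window_start, event["store"]), event["units"]


class RealTimeStore:
    """Storage layer: keeps running totals that are queryable at any moment."""

    def __init__(self) -> None:
        self.totals: dict[tuple[int, str], int] = defaultdict(int)

    def write(self, key: tuple[int, str], units: int) -> None:
        self.totals[key] += units


def units_sold(store: RealTimeStore, window_start: int, store_id: str) -> int:
    """Access layer: the kind of question a dashboard would ask."""
    return store.totals.get((window_start, store_id), 0)


def run_pipeline(source: Iterable[dict]) -> RealTimeStore:
    store = RealTimeStore()
    for key, units in process(ingest(source)):
        store.write(key, units)
    return store


# Hypothetical events: timestamp in seconds, store id, units sold.
events = [
    {"ts": 5, "store": "A", "units": 2},
    {"ts": 42, "store": "A", "units": 3},
    {"ts": 61, "store": "A", "units": 1},
    {"store": "B"},  # malformed, dropped at ingestion
]
store = run_pipeline(events)
print(units_sold(store, 0, "A"), units_sold(store, 60, "A"))  # 5 1
```

Because each layer only consumes the output of the one before it, any layer can be swapped for a managed service without changing the others.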

From Lambda to Kappa: Architectural Evolution

Early real-time systems implemented Lambda architecture, maintaining parallel batch and real-time processing paths. This provided both speed and completeness but created maintenance burden as teams managed two data pipelines.

Kappa architecture simplified the approach by eliminating the batch path, treating all data as streams. This reduces operational complexity but requires higher data quality and reliability.

Modern platforms increasingly abstract this distinction. Platforms like Databricks and Snowflake support both batch and streaming workloads, so organizations no longer need to choose between speed and completeness.
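The core Kappa idea, one append-only stream from which every view is derived, can be illustrated with a small sketch. The `EventLog` class and the reducers are hypothetical stand-ins for a durable log and real stream jobs; the point is only that changing the logic means replaying the same log rather than maintaining a second batch pipeline.

```python
from typing import Callable, Iterator


class EventLog:
    """Append-only log: the single source of truth in a Kappa-style design."""

    def __init__(self) -> None:
        self._events: list[dict] = []

    def append(self, event: dict) -> None:
        self._events.append(event)

    def replay(self, from_offset: int = 0) -> Iterator[dict]:
        return iter(self._events[from_offset:])


def build_view(log: EventLog, reducer: Callable[[int, dict], int], initial: int = 0) -> int:
    """Fold the whole stream into a view; there is no separate batch job."""
    state = initial
    for event in log.replay():
        state = reducer(state, event)
    return state


def net_total(state: int, event: dict) -> int:
    return state + event["amount"]


def gross_sales(state: int, event: dict) -> int:
    # Revised logic: ignore refunds. Replaying the log applies it to history too.
    if event["type"] == "sale":
        return state + event["amount"]
    return state


log = EventLog()
for e in [
    {"type": "sale", "amount": 100},
    {"type": "sale", "amount": 50},
    {"type": "refund", "amount": -30},
]:
    log.append(e)

print(build_view(log, net_total), build_view(log, gross_sales))  # 120 150
```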

Managed Services: Reducing Complexity

Cloud providers have dramatically reduced the operational burden of real-time systems by offering managed services. Rather than organizations managing infrastructure, they use cloud provider services and focus on understanding data, defining metrics, and building governance.

The trade-off is reduced flexibility and some vendor lock-in. But for most organizations, the reduced operational burden and lower infrastructure investment justify these trade-offs.

Data Quality at Speed: Ensuring Trustworthiness

Real-time systems only deliver value if data is trustworthy. Ensuring data quality requires:

- Data quality checks at ingestion: Validate data immediately as it enters, preventing bad data from flowing downstream.

- Metadata quality: Document source, transformation, ownership, refresh frequency, and known limitations.

- Continuous monitoring: Monitor data quality continuously, tracking trends and alerting when quality deteriorates.

- Testing and validation: Robust testing must validate that pipeline behavior remains correct as data patterns change.
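As an illustration of checking quality at ingestion, the sketch below validates each record against a small schema and quarantines failures with their reasons. The schema, field names, and helper names are assumptions for the example, not a prescribed standard.

```python
# Hypothetical schema for an order event: field name -> expected Python type.
REQUIRED_FIELDS: dict[str, type] = {"order_id": str, "amount": float, "currency": str}


def validate(record: dict) -> list[str]:
    """Return a list of problems; an empty list means the record is clean."""
    problems = []
    for name, expected_type in REQUIRED_FIELDS.items():
        if name not in record:
            problems.append(f"missing field: {name}")
        elif not isinstance(record[name], expected_type):
            problems.append(f"wrong type for {name}")
    amount = record.get("amount")
    if isinstance(amount, float) and amount < 0:
        problems.append("amount is negative")
    return problems


def route(records: list[dict]) -> tuple[list[dict], list[tuple[dict, list[str]]]]:
    """Split records into accepted ones and quarantined ones with reasons."""
    accepted, quarantined = [], []
    for record in records:
        problems = validate(record)
        if problems:
            quarantined.append((record, problems))
        else:
            accepted.append(record)
    return accepted, quarantined


batch = [
    {"order_id": "o-1", "amount": 19.99, "currency": "USD"},
    {"order_id": "o-2", "amount": 5.00},                       # missing currency
    {"order_id": "o-3", "amount": -4.50, "currency": "USD"},   # negative amount
]
accepted, quarantined = route(batch)
print(len(accepted), len(quarantined))  # 1 2
```

Tracking the share of quarantined records over time gives a simple signal for the continuous monitoring described above.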

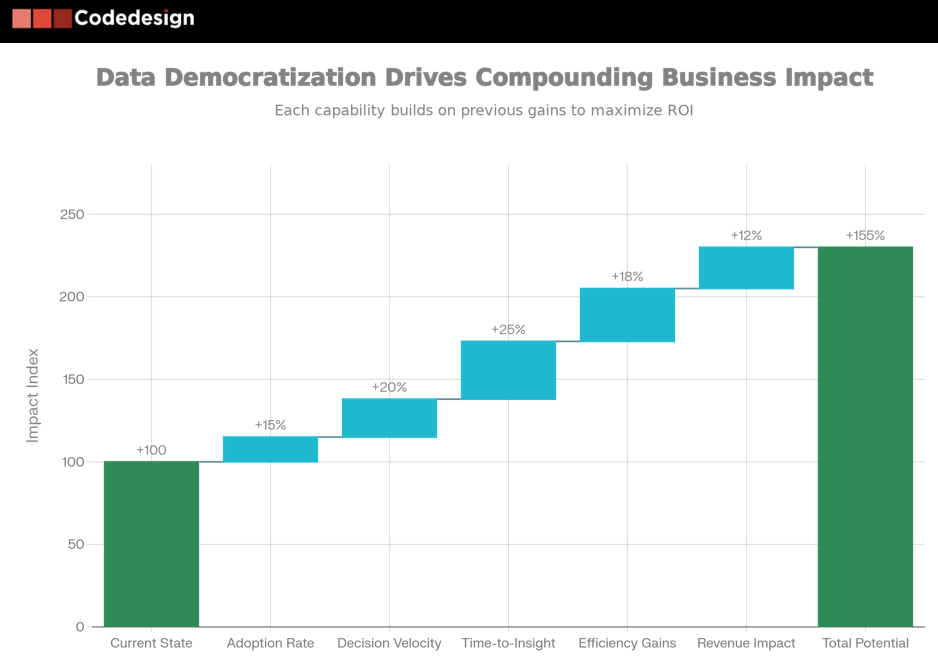

5. The ROI of Democratization: From Data Access to Business Outcomes

While democratization's cultural and strategic benefits are significant, organizations ultimately invest because of concrete business impact. Understanding how to measure and realize ROI is essential for securing investment and sustaining initiatives.

Measuring Data Utilization

Before quantifying business impact, organizations should establish baseline metrics for data utilization:

- Adoption metrics: Percentage of target population with platform access, percentage actively using platforms monthly, frequency of data inquiries.

- Engagement metrics: Average session duration, dashboard views per user per month, reports created, questions asked.

- Coverage metrics: Percentage of business questions addressable through self-service tools, percentage of business processes informed by real-time data.
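A baseline for these metrics can start as a few ratios. The sketch below is one possible definition, assuming the active rate is measured against users who have access rather than the whole target population; the function and key names are hypothetical.

```python
def utilization_metrics(
    target_population: int,
    users_with_access: int,
    monthly_active_users: int,
    dashboard_views: int,
) -> dict[str, float]:
    """Compute simple adoption and engagement ratios for one month."""

    def ratio(numerator: int, denominator: int) -> float:
        # Avoid dividing by zero before a rollout has any users.
        return numerator / denominator if denominator else 0.0

    return {
        "access_rate": ratio(users_with_access, target_population),
        "monthly_active_rate": ratio(monthly_active_users, users_with_access),
        "views_per_active_user": ratio(dashboard_views, monthly_active_users),
    }


# Hypothetical numbers for a 500-person target population.
print(utilization_metrics(500, 400, 100, 1200))
# {'access_rate': 0.8, 'monthly_active_rate': 0.25, 'views_per_active_user': 12.0}
```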

Time-to-Insight Improvements

Perhaps the most direct measure of democratization's impact is reduction in time from data collection to actionable insight:

- Time to insight (TTI): The elapsed time from when data is generated to when insights become available. In batch systems, this might be 24+ hours. In real-time democratized systems, this compresses to seconds or minutes.

- Time to decision (TTD): The time from when insights are available to when decisions are made. Democratized systems reduce TTD by distributing insights throughout the organization and enabling action by decision-makers.
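Both measures are differences between timestamps, so they are easy to compute once those timestamps are recorded. The sketch below uses invented timestamps to contrast a next-morning batch report with a streaming dashboard; the function names are illustrative.

```python
from datetime import datetime, timedelta


def time_to_insight(generated_at: datetime, insight_at: datetime) -> timedelta:
    """Elapsed time from data generation to an available insight."""
    return insight_at - generated_at


def time_to_decision(insight_at: datetime, decided_at: datetime) -> timedelta:
    """Elapsed time from an available insight to the decision."""
    return decided_at - insight_at


# Hypothetical timestamps for one event handled two ways.
generated = datetime(2025, 3, 3, 9, 0, 0)
batch_insight = datetime(2025, 3, 4, 9, 30, 0)      # next-morning report
streaming_insight = datetime(2025, 3, 3, 9, 0, 45)  # dashboard updates in seconds
decision = datetime(2025, 3, 3, 9, 20, 0)

print(time_to_insight(generated, batch_insight))      # 1 day, 0:30:00
print(time_to_insight(generated, streaming_insight))  # 0:00:45
print(time_to_decision(streaming_insight, decision))  # 0:19:15
```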

Decision Velocity: The Ultimate Metric

Organizations should measure decision velocity—the combination of decision frequency and decision speed. According to Gartner research, organizations with strong analytics capabilities make decisions 5x faster than peers. Companies using AI in data analytics are 5x more likely to accelerate decision-making and operational efficiency.

Quantifying Business Outcomes

The ultimate measure of democratization success is business impact. Organizations should establish outcome metrics aligned with strategic priorities:

- Revenue impact: Incremental revenue from improved decision-making through pricing optimization, churn reduction, or sales improvement.

- Cost savings: Operational efficiency improvements, prevented losses, and reduced waste.

- Operational efficiency: Real-time data enables efficiency improvements that compound over time. The automobile manufacturer cited earlier reduced unplanned downtime by 78% and improved equipment efficiency by 2x.

- Competitive responsiveness: Organizations that respond faster to market changes often capture share from slower competitors.

ROI Research

Research indicates organizations can achieve significant ROI:

- Manufacturers implementing real-time analytics achieve 18% productivity increases and 15% maintenance cost reductions

- Companies adopting data-driven decision-making increase productivity by 63%

- Organizations with democratized data access achieve time-to-insight reductions of up to 80%

6. Implementation Roadmap: From Assessment to Sustained Operation

Strategic commitment and a business case are necessary but insufficient. Success requires structured implementation that progresses logically from assessment through sustained operation.

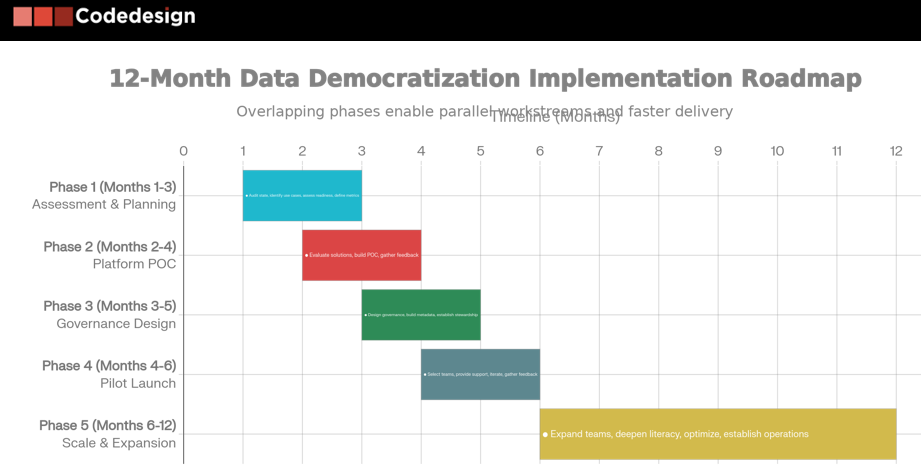

Phase 1: Assessment and Planning (Months 1-2)

- Audit current state: Document existing data flows, tools, and pain points. Where do decisions originate? What data do decision-makers currently use? Where do data silos create the greatest friction?

- Identify high-impact use cases: Rather than attempting organization-wide democratization immediately, focus initial effort on areas with clear pain, quantifiable impact, and receptive stakeholders.

- Assess organizational readiness: Evaluate technical capabilities, cultural readiness, and resource availability.

- Define success metrics: Establish baseline measurements and target improvements. What will success look like? How will you measure it? What timeline is realistic?

Phase 2: Platform Selection and Proof of Concept (Months 2-4)

- Evaluate solutions: Research available platforms, considering factors like ease of use, integration capabilities, scalability, governance features, cost, and vendor viability.

- Run proof of concept: Test with pilot use cases. Build specific dashboards, measure adoption, gather feedback, and assess whether the platform supports your vision.

- Build internal case: Success on proof of concept builds internal credibility and confidence in the path forward.

Phase 3: Governance Framework (Months 3-5)

- Design governance approach: Define data classifications, access policies, quality standards, and documentation requirements. Effective governance is lightweight on restrictions and heavy on transparency.

- Build metadata management: Implement systems that capture data source, transformation logic, ownership, and quality metrics.

- Establish data stewardship: Assign ownership of critical data domains and metrics. Data stewards ensure quality, documentation, and alignment with business definitions.
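One lightweight way to picture a metadata record is as a small structure that a data steward fills in for each dataset. The field list below follows the attributes named in this guide; the class name, helper, and sample values are hypothetical.

```python
from dataclasses import dataclass, field, fields


@dataclass
class DatasetMetadata:
    name: str
    source: str = ""
    transformation: str = ""
    owner: str = ""
    refresh_frequency: str = ""
    known_limitations: list[str] = field(default_factory=list)


def undocumented_fields(meta: DatasetMetadata) -> list[str]:
    """List the attributes a steward still needs to fill in.

    An empty value is treated as undocumented, so "no known limitations"
    should be recorded explicitly rather than left blank.
    """
    return [f.name for f in fields(meta) if not getattr(meta, f.name)]


# Hypothetical dataset with only part of its documentation in place.
churn = DatasetMetadata(name="weekly_churn", source="CRM export", owner="retention team")
print(undocumented_fields(churn))
# ['transformation', 'refresh_frequency', 'known_limitations']
```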

Phase 4: Pilot Launch (Months 4-6)

- Select diverse pilot teams: Include teams with less analytical background to identify capability gaps.

- Provide intensive support: Pilot teams should receive dedicated support, training, and attention. Quick wins build credibility and momentum.

- Gather feedback: Understand where teams struggle, what capability gaps exist, and what would accelerate adoption.

- Iterate rapidly: Use feedback to improve platform configuration, training content, and governance policies.

Phase 5: Scaling and Expansion (Months 6-12)

- Expand to additional teams: Incorporate lessons from pilots into organization-wide rollout, scaling training and support as additional teams adopt.

- Deepen data literacy: Build capability gradually, targeting foundational knowledge first, then advanced skills.

- Optimize based on learnings: Continue refining platform configuration, governance, training, and support.

- Establish self-sustaining operations: Transition from intensive project mode to sustainable operations where teams own analytics for their domains.

7. Tools, Platforms & Technology Landscape

The technology landscape for data democratization has matured significantly, offering organizations multiple paths to build democratized workflows. Rather than recommending specific solutions, it's more valuable to understand categories and evaluate options thoughtfully based on organizational context.

Major Platform Categories

Unified data platforms like Microsoft Fabric, Databricks, and Snowflake represent convergence toward platforms that handle multiple data requirements—warehousing, streaming, analytics, and sometimes AI—within single environments.

No-code/low-code analytics tools like Tableau, Power BI, Looker, and Qlik enable business users to create visualizations, dashboards, and reports without coding.

Data integration platforms like Talend, Fivetran, and Stitch simplify moving data from sources to warehouses, increasingly with real-time streaming capabilities.

Evaluation Framework

When evaluating platforms, consider:

- Ease of use: How quickly can non-technical users create dashboards? How much training is required?

- Integration capabilities: Does the platform connect easily to systems you use? Are APIs available?

- Real-time capabilities: What latency can the system support? Can it handle your data volume and velocity?

- Scalability: Can the system scale to your projected data volumes? How does performance degrade with concurrent users?

- Governance: Does the platform support role-based access control, data classification, lineage tracking, and audit logging?

- Vendor stability: Is the vendor likely to remain solvent and relevant? What's their roadmap?

- Total cost of ownership: Build complete cost models including implementation, integration, training, and operations.
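These criteria can be combined into a simple weighted score to compare candidates side by side. The weights and the 1-5 scores below are invented for illustration; each organization would set its own, and a score like this supports a proof of concept rather than replacing one.

```python
# Hypothetical weights (percent, summing to 100) for the seven criteria above.
WEIGHTS: dict[str, int] = {
    "ease_of_use": 20,
    "integration": 15,
    "real_time": 15,
    "scalability": 15,
    "governance": 15,
    "vendor_stability": 10,
    "total_cost": 10,
}


def weighted_score(scores: dict[str, int], weights: dict[str, int] = WEIGHTS) -> float:
    """Combine per-criterion scores (1 = poor, 5 = excellent) into one number."""
    missing = set(weights) - set(scores)
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    return sum(weights[name] * scores[name] for name in weights) / sum(weights.values())


# Invented scores for two unnamed candidate platforms.
platform_a = {"ease_of_use": 4, "integration": 3, "real_time": 5, "scalability": 4,
              "governance": 3, "vendor_stability": 4, "total_cost": 2}
platform_b = {"ease_of_use": 3, "integration": 4, "real_time": 3, "scalability": 4,
              "governance": 5, "vendor_stability": 3, "total_cost": 4}

print(weighted_score(platform_a), weighted_score(platform_b))  # 3.65 3.7
```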

Selection Approach

Rather than attempting to choose definitively before implementation, successful organizations:

- Assess organizational context (existing tools, team expertise, strategic direction)

- Evaluate top candidates through proof-of-concept projects

- Make selection based on direct experience rather than marketing

- Build flexibility into the architecture so that switching platforms in the future (if necessary) doesn't require a complete rebuild

8. Overcoming Challenges: Common Pitfalls and How to Avoid Them

Data democratization initiatives fail regularly despite good intentions, strong investment, and executive commitment. Understanding common failure modes and mitigation strategies dramatically improves success probability.

Data Quality Issues: Garbage In, Garbage Out

The challenge: Democratizing access to poor-quality data is worse than no access. Non-technical users lack expertise to identify quality issues, so they build decisions on unreliable foundations.

Mitigation approaches:

- Implement data quality checks at ingestion that prevent poor quality data from entering systems

- Build metadata that surfaces data quality metrics to users

- Start democratization with high-confidence data sources rather than problematic ones

- Create feedback mechanisms so users can flag quality issues they discover

- Implement root cause analysis processes so issues are resolved, not just documented

Governance Slippage: Too Much or Too Little

The challenge: Organizations often struggle to balance governance—either becoming so restrictive that democratization never happens, or so permissive that compliance and security risks emerge.

Mitigation approaches:

- Build governance as part of the platform from the start rather than bolting it on later

- Focus governance on transparency and accountability rather than approval bureaucracy

- Implement automated governance where possible (data classification, access logging) rather than manual controls

- Run regular risk assessments to ensure governance remains appropriate as the organization evolves

- Write governance policies that explain their rationale so teams understand why rules exist

Technology Complexity: Choosing Approaches That Fit Organizational Capability

The challenge: Organizations sometimes adopt cutting-edge but complex technologies without adequate expertise to operate them.

Mitigation approaches:

- Match technology sophistication to team expertise

- When in doubt, choose managed services over self-managed

- Plan for capability growth; it's fine to outgrow the initial platform over 3-5 years

- Vendor selection should include consideration of support availability and ecosystem strength

Organizational Resistance: Change Management That Actually Works

The challenge: The most sophisticated platform with perfect governance still fails if users don't adopt it. Common reasons include: changes require working differently, perceived threat to analyst jobs, competing priorities, skepticism based on prior failed initiatives.

Mitigation approaches:

- Executive sponsorship that visibly models desired behaviors

- Involvement of skeptics early in platform design so they become advocates

- Focus on quick wins that demonstrate tangible value

- Direct support for early adopters, removing obstacles so success is achievable

- Recognition and celebration of successful adoptions

- Honest conversation about role changes and how affected employees can transition into higher-value work

ROI Not Materializing: From Insights to Action

The challenge: Organizations build beautiful dashboards that no one uses, or dashboards reveal insights that don't drive action.

Mitigation approaches:

- Ensure decision-making authority exists before deploying insights

- Build action mechanisms into analytics—dashboards shouldn't just show problems but enable action

- Measure decision velocity, not just insight generation

- Identify specific decisions that analytics should inform, then design to support those

9. The Future: Where Democratized Real-Time Data Is Heading

The trajectory of data democratization is clear: it will become a universal expectation rather than a competitive advantage. Organizations that understand emerging developments and position themselves accordingly will thrive.

AI-Powered Insights: From Dashboards to Proactive Intelligence

Current democratization primarily focuses on enabling users to access data and answer their own questions. The next evolution is AI systems that surface insights proactively, anticipating decisions before users realize they face them.

- Copilots and AI agents: Tools will embed AI assistants that understand business context and proactively surface relevant insights. Gartner estimates that by 2026, 90% of current analytics content consumers will become content creators enabled by AI.

- Autonomous decision-making: Moving beyond insights to automated action. Real-time fraud detection systems don't just identify suspicious transactions; they prevent them. Predictive maintenance systems don't just alert engineers; they automatically schedule maintenance.

Democratization at the Edge

Currently, real-time democratization concentrates data in central systems. The next frontier distributes intelligence to where data originates. Rather than sending sensor data from manufacturing equipment to central cloud systems for analysis, edge computing pushes analytics to the equipment itself, enabling immediate local decisions.

Privacy-First Real-Time Analytics

As privacy regulations tighten globally, organizations will develop approaches to democratize insights while protecting sensitive information. Federated learning, differential privacy, and confidential computing will enable real-time analytics that respects privacy.
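Of the techniques named above, differential privacy is the easiest to show in miniature. The sketch below applies the Laplace mechanism to a count query using only the standard library; the function names are hypothetical, and a production system would rely on a vetted privacy library rather than hand-rolled noise.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) noise.

    The difference of two independent exponential variables with the same
    rate is Laplace-distributed, which avoids needing an external library.
    """
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_count(true_count: int, epsilon: float, rng: random.Random) -> float:
    """Release a count with epsilon-differential privacy (Laplace mechanism)."""
    sensitivity = 1.0  # adding or removing one person changes a count by at most 1
    return true_count + laplace_noise(sensitivity / epsilon, rng)


rng = random.Random(42)  # seeded only so the demo is repeatable
print(private_count(100, epsilon=0.5, rng=rng))  # a noisy value near 100
```

Smaller values of `epsilon` add more noise and give stronger privacy; larger values give more accurate counts.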

The Role of LLMs in Data Exploration

Large language models will dramatically improve accessibility of data analysis. Rather than users learning SQL or platform-specific query languages, they'll ask questions in natural language. "Which customers are at risk of churning?" "What are demand trends for product X by region?" LLMs will translate natural language to system queries, retrieve data, synthesize results, and explain findings.

Autonomous Analytics: AI Identifying Insights Humans Might Miss

Autonomous analytics systems will independently analyze data, identify patterns humans might overlook, and alert stakeholders to significant findings. In manufacturing, systems will identify subtle equipment degradation patterns before human technicians notice. In healthcare, systems will identify patient subgroups likely to benefit from specific interventions.

The Competitive Advantage: Insights Velocity

By 2028-2030, real-time data democratization will be table stakes—expected of all organizations. The new competitive frontier will be insights velocity—the speed at which organizations identify opportunities or risks and respond.

Organizations competing on insights velocity will:

- Have AI systems that continuously analyze data and surface insights proactively

- Operate decision automation that acts on insights instantly

- Measure decision velocity as a core KPI

- Build organizations where data literacy is universal and data-driven thinking is the default

- Compete on execution speed, not information availability
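The article does not define how decision velocity would be measured, so the following is only one possible sketch. It assumes the KPI is the median time between an insight being surfaced and the resulting action being taken; the `Decision` record and its field names are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median


@dataclass
class Decision:
    insight_at: datetime  # when the insight was surfaced (assumed field)
    acted_at: datetime    # when the organization acted on it (assumed field)


def median_decision_latency(decisions: list[Decision]) -> timedelta:
    """Median time from insight to action; raises StatisticsError on an empty list."""
    latencies = [(d.acted_at - d.insight_at).total_seconds() for d in decisions]
    return timedelta(seconds=median(latencies))
```

The median is used here rather than the mean so that a single stalled decision does not dominate the figure; tracking it over time would show whether the organization is actually getting faster.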

10. Building Your Data-Driven Culture: Final Thoughts and Action Items

Real-time data democratization ultimately isn't about technology. It's about culture: building organizations where data is recognized as a strategic asset, where insights are shared broadly, where decisions are grounded in evidence, and where technology enables human judgment rather than replacing it.

Why Culture is the Real Bottleneck

Organizations invest heavily in technology but often underestimate the cultural transformation required for success. Technology is relatively easy: buying or building platforms requires capital and expertise. Culture is harder: it requires sustained leadership commitment, honest confrontation of organizational politics, and a willingness to challenge entrenched ways of working.

Yet culture ultimately determines outcomes. An organization with mediocre technology and a strong data-driven culture will outperform one with sophisticated technology and a weak culture. The first organization will improvise solutions and drive impact. The second will have beautiful dashboards that no one uses.

Building a data-driven culture requires:

- Executive commitment: Leaders must visibly use data in their own decisions, demand evidence for proposals, reward teams that operate with data discipline, and invest resources consistently in capability building.

- Clear strategy: The organization must articulate why democratization matters, what success looks like, and what role each part of the organization plays.

- Patience with speed: Culture change takes years, not months. Organizations should expect 18-36 months to reach a meaningful shift and 3-5 years to reach a mature culture. At the same time, they should pursue quick wins that build momentum and credibility.

- Celebration of progress: Recognizing and celebrating successes makes the culture shift visible and reinforces desired behaviors.

Starting Points for Leaders

Rather than waiting for perfect plans, leaders should begin with immediate actions:

- Assessment: Audit the current state. Where do data silos create the greatest friction? Which teams are most receptive? Which decisions would improve most with better data?

- Sponsorship: Pick one meaningful initiative and sponsor it visibly. Allocate resources, remove obstacles, and track progress. Success creates momentum.

- Metrics: Establish baseline measurements. What does the current state look like? How will you measure improvement?

- Learning: Invest in building organizational understanding of data and analytics. It's equally important that everyone understands the strategic rationale.

- Communication: Tell the story repeatedly. Why does democratization matter? What's possible when data is accessible? Celebrate progress publicly.

Implementation Checklist

Assessment phase:

- Audit current data flows and pain points

- Identify 2-3 high-impact use cases

- Assess organizational readiness

- Establish baseline metrics

- Secure executive sponsorship

Planning phase:

- Define success criteria and timeline

- Evaluate technology platforms through a proof of concept (POC)

- Design governance framework

- Develop change management strategy

- Allocate resources and budget

Implementation phase:

- Launch pilot with early adopter teams

- Provide intensive training and support

- Gather feedback and iterate rapidly

- Build internal case studies and examples

- Plan organization-wide rollout

Scaling phase:

- Expand to additional teams and use cases

- Deepen data literacy progressively

- Optimize based on learnings

- Establish sustainable operations

- Plan evolution to next-generation capabilities

The Opportunity Before Organizations

Real-time data democratization is no longer a technology choice; it's becoming a business imperative. Organizations that successfully democratize gain fundamental competitive advantages: they make decisions faster, respond to market changes with more agility, and innovate more continuously than competitors. Over the years, these incremental advantages compound into dominant competitive positions.

The organizations that win in the coming decade will be those that democratize not just data access but data literacy, governance, and decision-making itself. They'll recognize that competitive advantage comes not from data hoarding but from getting the right information to the right people at the right time, and empowering them to act on it.

The opportunity is immense. The time to begin is now.
