
In an era dominated by technological advancements, the rise of Artificial Intelligence (AI) has revolutionized industries and daily life. As AI continues to proliferate, concerns surrounding safety and security have taken center stage. In this post, we explore the evolving landscape of AI regulations and their crucial role in mitigating risks and ensuring the responsible development and deployment of AI technologies.

CodeDesign is a leading digital marketing agency ranked #1 in Lisbon, Portugal. You could work with us to accelerate your business growth.

Understanding AI Safety Risks

Understanding AI safety risks is the starting point for navigating the field of artificial intelligence. As AI technologies advance, they raise concerns about risks to human safety, privacy, and security. Issues such as algorithmic bias, unintended consequences in automated decision-making, and the ethical implications of AI-powered systems all need careful examination. This understanding is what makes proactive risk mitigation possible: by acknowledging these challenges and potential pitfalls up front, stakeholders in the AI industry can take a preemptive and responsible approach, ensuring that innovation goes hand in hand with the safety and ethical safeguards that sustainable AI development requires.

The Evolving Landscape of AI Regulations

In response to the growing concerns surrounding AI safety, regulatory frameworks are emerging worldwide. Governments and international bodies are adapting their regulatory approaches to keep pace with the rapid advancements in AI technologies. As safety, ethics, and transparency concerns mount, policymakers are working to establish comprehensive guidelines that address these challenges. The regulatory frameworks are becoming more nuanced, reflecting a growing understanding of the diverse applications and potential risks associated with AI. This evolution underscores the need for flexible and adaptive regulations that can effectively govern the ever-changing landscape of artificial intelligence.

Regulatory Frameworks for AI Safety

Establishing effective regulatory frameworks for AI safety is complex but necessary. Governments worldwide are working to create guidelines that strike the right balance between fostering innovation and ensuring safety. Some regions adopt prescriptive regulations that set specific rules for AI development, while others opt for flexible guidelines that can accommodate different contexts. Independent AI evaluators also play a growing role in shaping and enforcing these regulations. Evaluators at fortifai.org recommend working with third-party testers and evaluators, whose expertise in assessing AI systems helps refine regulatory frameworks and keep them aligned with ethical standards and evolving technology. Collaboration between regulatory bodies and specialized entities such as the AI evaluators at fortifai.org helps create a robust framework that promotes responsible AI development and addresses safety concerns in this rapidly advancing field.

Ethical Considerations in AI Regulations

As the AI landscape matures, ethical considerations become increasingly important. Policymakers and industry leaders recognize the importance of integrating fairness, transparency, and accountability into AI systems. As regulations evolve, ethical guidelines help ensure that AI technologies are developed and deployed responsibly, minimizing biases and unintended consequences. Striking the right balance between fostering innovation and upholding ethical standards remains a delicate but necessary task. Ethical considerations embedded within regulatory frameworks help build trust among users and stakeholders, fostering a responsible and sustainable approach to the development and deployment of AI technologies.

Challenges and Criticisms

The implementation of AI regulations faces various challenges and criticisms that highlight the delicate balance between oversight and innovation. One challenge lies in striking the right regulatory balance: stringent regulations risk impeding the rapid development of AI technologies, potentially stifling industry competitiveness and hindering breakthroughs. Another challenge is keeping regulations adaptable. Keeping them up to date with the latest technological advancements is a daunting task, and AI can evolve faster than regulators are able to respond. This underscores the need for agile, flexible regulatory frameworks that can adapt to emerging risks and opportunities.

In addition to challenges, criticisms of AI regulations often center on concerns about overreach or inadequacy. Some argue that regulations may not be sufficiently comprehensive, leaving gaps that could be exploited. Others express concerns about potential biases in the development of regulations or that overly prescriptive rules may stifle innovation. Striking a balance that addresses these criticisms while creating a regulatory environment that safeguards against misuse and ensures ethical AI development is an ongoing challenge that requires continual refinement and collaboration between policymakers, industry stakeholders, and ethicists.

The Collaborative Approach

The collaborative approach is pivotal in addressing the intricate challenges associated with AI safety and regulation. In this context, collaboration involves active engagement and partnership between governments, industry stakeholders, and academia. By fostering open communication channels and sharing insights, a collaborative approach ensures a comprehensive understanding of the evolving landscape of AI technologies. This collective effort allows for the creation of effective regulatory frameworks that balance innovation with safety and ethical considerations. Embracing a collaborative approach is key to navigating the complex terrain of AI, where diverse perspectives and expertise converge to shape responsible and sustainable AI development. The synergy between various stakeholders contributes to a more robust and adaptable regulatory environment capable of keeping pace with the dynamic nature of artificial intelligence.

Future Trends in AI Regulations

Anticipating future trends in AI regulations involves considering the rapid evolution of technology and its potential implications. As AI continues to advance, regulatory frameworks will likely be adjusted to address emerging challenges. Ethical considerations and transparency requirements may become more prominent. Specific regulations for different AI applications, such as autonomous vehicles or healthcare, might be on the horizon. Additionally, public opinion and advocacy are expected to play a significant role in shaping future regulations, influencing policymakers to strike the right balance between innovation and societal concerns. The ongoing evolution of technology suggests that future AI regulations will be marked by a continuous effort to adapt and refine frameworks to ensure responsible and ethical AI development.

As AI technologies continue to evolve, the role of regulations in ensuring their safety and security becomes increasingly vital. A comprehensive and adaptive regulatory framework is essential to harness the benefits of AI while mitigating potential risks. The journey toward responsible AI development requires ongoing collaboration, ethical considerations, and a commitment to learning from past experiences. Striking the right balance between innovation and safety is not only a regulatory challenge but a collective responsibility that shapes the future of technology in our rapidly changing world.

How AI is Changing Digital Marketing in the Context of Regulatory Frameworks

- Ethical Use of Data: AI enables more precise targeting in digital marketing, but regulatory frameworks ensure that this is done ethically. Marketers must be transparent about how they collect and use data, with a focus on respecting user privacy.

- Transparency in Algorithms: Regulations may require marketers to disclose how their AI algorithms make decisions, especially in programmatic advertising, where decisions are made in real time.

- Bias Prevention: AI systems can inadvertently perpetuate biases. Regulatory frameworks push for systems that are fair and non-discriminatory, which affects how marketers use AI for audience segmentation and targeting.

- Data Security: With AI handling vast amounts of data, regulations emphasize the importance of securing this data against breaches, which affects how digital marketers store and process user information.

- Accountability: There is a push for accountability in AI decisions. Marketers must ensure that their AI tools can explain their decisions and actions, particularly in automated systems like chatbots or recommendation engines.
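
To make the accountability point more concrete, here is a minimal Python sketch of an automated ad-targeting decision that records its own explanation. Everything in it is hypothetical: the `TargetingDecision` record, the `decide_and_log` function, the segment scores and the simple threshold rule are illustrative assumptions, not any specific ad platform's API. The idea is simply that each automated decision is stored together with the reasons behind it, so it can be reviewed later.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass
class TargetingDecision:
    """Hypothetical audit record for one automated ad-targeting decision."""
    user_segment: str
    ad_id: str
    shown: bool
    reasons: list[str]  # human-readable factors behind the decision
    model_version: str
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def decide_and_log(segment_scores: dict[str, float], ad_id: str,
                   threshold: float, model_version: str,
                   audit_log: list[str]) -> TargetingDecision:
    """Illustrative rule: show the ad if the best segment score meets the threshold."""
    best_segment, best_score = max(segment_scores.items(), key=lambda kv: kv[1])
    shown = best_score >= threshold
    reasons = [
        f"top segment '{best_segment}' scored {best_score:.2f}",
        f"threshold was {threshold:.2f}",
    ]
    decision = TargetingDecision(best_segment, ad_id, shown, reasons, model_version)
    # Store the decision and its reasons as one JSON line for later review.
    audit_log.append(json.dumps(asdict(decision)))
    return decision


if __name__ == "__main__":
    log: list[str] = []
    d = decide_and_log({"sports": 0.82, "travel": 0.41}, "ad-123", 0.7, "v1.0", log)
    print(d.shown, d.reasons)
    print(log[0])
```

Keeping the log as JSON lines is just one simple design choice; a real system would more likely write these records to durable, access-controlled storage.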

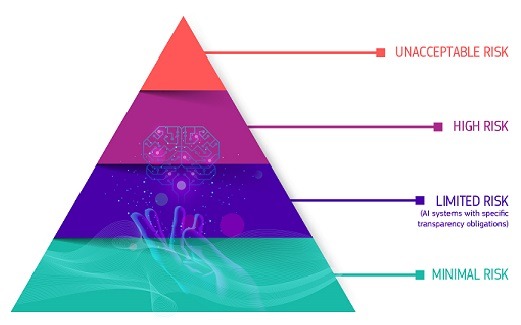

A Risk-Based Approach

The EU's regulatory framework for AI (the AI Act) defines four levels of risk:

- Unacceptable risk

- High risk

- Limited risk

- Minimal or no risk
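
One way to picture the four tiers is as a lookup from use case to risk level to obligations. The Python sketch below is a simplified illustration, not a legal classification: the obligation summaries are broad paraphrases, and the example use cases and the tiers assigned to them are assumptions chosen for illustration. `RiskLevel`, `OBLIGATIONS`, `USE_CASE_RISK` and `obligations_for` are hypothetical names.

```python
from enum import Enum


class RiskLevel(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Broad, simplified paraphrase of what each tier implies (not legal text).
OBLIGATIONS = {
    RiskLevel.UNACCEPTABLE: "prohibited",
    RiskLevel.HIGH: "strict requirements such as risk management, data governance and human oversight",
    RiskLevel.LIMITED: "transparency duties, e.g. telling users they are interacting with an AI system",
    RiskLevel.MINIMAL: "no specific obligations",
}

# Hypothetical example use cases and tier assignments, for illustration only.
USE_CASE_RISK = {
    "spam filter": RiskLevel.MINIMAL,
    "customer service chatbot": RiskLevel.LIMITED,
    "creditworthiness scoring for a financing offer": RiskLevel.HIGH,
    "subliminal manipulation of purchasing behavior": RiskLevel.UNACCEPTABLE,
}


def obligations_for(use_case: str) -> str:
    """Look up the tier of a known use case and summarize its obligations."""
    level = USE_CASE_RISK.get(use_case)
    if level is None:
        # Unclassified use cases need a proper assessment, not a default tier.
        raise KeyError(f"use case not classified: {use_case!r}")
    return f"{level.value} risk: {OBLIGATIONS[level]}"


if __name__ == "__main__":
    for case in USE_CASE_RISK:
        print(f"{case} -> {obligations_for(case)}")
```

Raising an error for unknown use cases, rather than silently defaulting to "minimal", reflects the idea that each new AI application should be assessed before it is deployed.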

Role of AI in Regulatory Compliance

AI in regulatory compliance serves multiple functions:

- Automated Monitoring and Reporting: AI systems can continuously monitor digital marketing activities to ensure adherence to various regulations. This includes tracking data usage, consent management, and ad placements.

- Predictive Analysis: AI can predict potential compliance risks by analyzing patterns and trends in data, helping companies proactively address issues before they escalate.

- Data Management: Effective management and anonymization of customer data are crucial for compliance. AI algorithms can assist in handling large datasets while ensuring privacy norms are met.

- Tailoring Content: AI can tailor digital marketing content to comply with region-specific regulations, automatically adjusting messages to fit legal requirements.
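
As an example of automated monitoring, the sketch below flags marketing events that use personal data for a purpose the user has not consented to. It is deliberately simple and rule-based rather than AI-driven; an AI-based monitor would typically layer pattern detection on top of basic checks like this one. The `MarketingEvent` type, the purpose names and the `consents` mapping (user ID to the set of purposes that user agreed to) are hypothetical, standing in for whatever a real consent-management tool exports.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MarketingEvent:
    """Hypothetical record of one use of personal data in a campaign."""
    user_id: str
    purpose: str  # e.g. "email_marketing" or "ad_personalization"


def find_consent_violations(events: list[MarketingEvent],
                            consents: dict[str, set[str]]) -> list[MarketingEvent]:
    """Return events whose user has not consented to that processing purpose.

    `consents` maps a user ID to the set of purposes that user agreed to;
    users with no entry are treated as having given no consent.
    """
    violations = []
    for event in events:
        granted = consents.get(event.user_id, set())
        if event.purpose not in granted:
            violations.append(event)
    return violations


if __name__ == "__main__":
    events = [
        MarketingEvent("u1", "email_marketing"),
        MarketingEvent("u2", "ad_personalization"),
        MarketingEvent("u3", "email_marketing"),
    ]
    consents = {"u1": {"email_marketing"}, "u2": {"email_marketing"}}
    for v in find_consent_violations(events, consents):
        print(f"ALERT: {v.user_id} used for {v.purpose} without consent")
```

Treating a missing consent record as "no consent" is the conservative choice here: the check fails closed rather than assuming permission.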

What is a Regulation in Artificial Intelligence?

A regulation in artificial intelligence refers to legal frameworks designed to guide the development and use of AI technologies. These regulations often aim to:

- Ensure Ethical Use: Preventing AI from infringing on individual rights, promoting fairness, and avoiding bias.

- Protect Data Privacy: Setting standards for how AI systems collect, store, and process personal data.

- Maintain Transparency and Accountability: Requiring clarity in how AI systems make decisions, especially those impacting consumers.

Why Artificial Intelligence Needs to be Regulated

AI needs regulation for several reasons:

- Ethical Concerns: Without regulation, AI systems might be developed with biases or in ways that harm society.

- Data Privacy: AI's ability to process vast amounts of personal data poses significant privacy risks.

- Accountability: Determining responsibility for AI’s actions can be challenging; regulation helps establish clear guidelines.

- Public Trust: Regulation helps build public trust in AI technologies, essential for widespread adoption and beneficial use.

The EU Regulation for AI

The EU's approach to AI regulation focuses on creating a balanced ecosystem that encourages innovation while protecting fundamental rights. Key aspects include:

- Risk-Based Approach: Classifying AI applications by risk level, with stricter rules for high-risk ones.

- Transparency Requirements: Mandating clear information about how AI systems operate, especially for consumer-facing applications.

- Data Governance: Ensuring AI systems use high-quality data to prevent harmful biases.

- Human Oversight: Requiring human oversight for high-risk AI systems to prevent unintended harm.

How AI is Changing Regulatory Compliance

In digital marketing, AI is changing how companies approach regulatory compliance. AI-driven analytics and real-time monitoring help companies keep up with complex and evolving regulations more efficiently. Used well, this technology not only supports compliance but also fosters a more ethical and transparent digital marketing environment, enhancing consumer trust.

Frequently Asked Questions

- How does AI enhance data privacy in digital marketing?

AI can help identify and anonymize personal data, supporting compliance with data protection regulations.

- Can AI completely automate regulatory compliance in marketing?

While AI significantly aids in automation, human oversight is still essential for nuanced decision-making and ethical considerations.

- What challenges do companies face in using AI for regulatory compliance?

Challenges include integrating AI into existing systems, ensuring AI's decisions are explainable, and keeping up with evolving regulations.

- How does the EU’s AI regulation impact global digital marketing strategies?

Global strategies must align with EU regulations when targeting EU citizens, requiring adjustments in data handling and AI deployment.

- What are the consequences of non-compliance with AI regulations in marketing?

Non-compliance can lead to fines, legal action, and damage to a company’s reputation.

- How do EU regulations impact digital marketing strategies?

EU regulations necessitate greater transparency and ethical use of data in marketing strategies, focusing on user consent and data privacy.

- What is the role of AI in complying with these regulatory frameworks?

AI can help automate compliance processes, such as anonymizing data and checking that advertisements meet ethical standards.

- Can AI in digital marketing still be effective under strict regulations?

Yes, AI can be effectively used by focusing on personalization within the bounds of user consent and ethical data use.

- How do regulatory frameworks affect the use of customer data in AI-driven marketing?

Regulations often require explicit consent for data collection and use, limiting the scope of data that can be used by AI for personalized marketing. This necessitates more strategic data collection methods and transparent communication with customers.

- Can AI help in ensuring compliance with these regulations?

Yes, AI can be instrumental in automating compliance tasks, such as monitoring data usage, ensuring consent management, and even identifying potential compliance risks in marketing campaigns.

- What are the implications of AI regulations for small businesses and startups in digital marketing?

Small businesses and startups must be particularly vigilant in adhering to AI regulations, as they might lack the resources for extensive compliance infrastructures. They need to focus on ethical AI practices from the outset to avoid legal complications.

- How do these regulations impact the development of new AI technologies in marketing?

These regulations may slow the pace of development, but they encourage more responsible and ethically sound AI innovation. Companies are incentivized to develop AI solutions that prioritize user privacy and ethical considerations.

- What should digital marketers do to stay updated with changing AI regulations?

Marketers should continuously educate themselves on regulatory changes, possibly engage with legal experts or regulatory bodies, and adopt a flexible approach in their marketing strategies to accommodate evolving rules.

- How do AI regulations influence customer trust in digital marketing?

Strict regulations can enhance customer trust, as they assure customers that their data is being used responsibly and ethically. This can lead to stronger customer relationships and brand loyalty.

- Are there specific AI technologies in marketing that are more affected by these regulations?

Technologies that heavily rely on personal data, like personalized recommendation engines or targeted advertising platforms, are more impacted by these regulations due to their intensive data usage.

- What is the role of data protection officers (DPOs) in AI-driven marketing?

DPOs play a crucial role in ensuring that marketing strategies and AI technologies comply with data protection laws, and they act as liaisons between companies and regulatory authorities.

- How can companies ensure their AI marketing tools are compliant with international regulations?

Companies should design their AI tools with global standards in mind, regularly audit their practices, and possibly consult with legal experts specialized in international data protection laws.

FAQs - Frequently Asked Questions

What are the main safety risks associated with AI technologies?

The main safety risks associated with AI technologies stem from unintended consequences of automation and machine learning. These risks include data privacy breaches, where sensitive information can be compromised because of AI's extensive data processing. Bias in AI algorithms is another critical risk, leading to discriminatory outcomes against certain groups. Additionally, AI systems can be manipulated in cyber-attacks, making them vulnerable to hacking and misuse. The autonomy of AI systems also creates the risk of unpredictable behavior, where systems take actions that could harm individuals or society. These concerns call for stringent safety and security measures in the development and deployment of AI technologies.

How are governments worldwide responding to the challenges of AI safety and security?

Governments worldwide are increasingly recognizing the importance of regulating AI to ensure safety and security. Many have started to develop and implement national AI strategies that include regulatory frameworks aimed at addressing the ethical, legal, and technical challenges posed by AI technologies. For instance, the European Union's Artificial Intelligence Act is a pioneering effort to create a comprehensive regulatory environment for AI, focusing on high-risk applications. Similarly, the United States has established guidelines for the development and use of AI in government agencies, emphasizing trustworthiness and public engagement. These efforts reflect a global trend towards creating norms and standards that safeguard against the risks associated with AI technologies.

What role do AI evaluators play in the regulatory process?

AI evaluators play a crucial role in the regulatory process by assessing AI systems for compliance with established standards and regulations. They are responsible for conducting audits and evaluations of AI technologies to ensure they adhere to ethical guidelines, safety protocols, and legal requirements. AI evaluators examine the algorithms' fairness, transparency, and accountability, assessing potential biases and risks associated with their deployment. Their expertise helps in identifying vulnerabilities and ensuring that AI systems are designed and operated in a manner that protects users and the broader society. By providing an independent assessment, AI evaluators contribute significantly to the trustworthiness and reliability of AI technologies in various sectors.

Why are ethical considerations important in AI regulations?

Ethical considerations are paramount in AI regulations because they address the broader impact of AI technologies on society, ensuring that the development and deployment of AI systems are aligned with societal values and norms. These considerations include fairness, accountability, transparency, and respect for privacy. By integrating ethical principles into AI regulations, policymakers aim to prevent harm, discrimination, and erosion of privacy, fostering public trust in AI technologies. Ethical AI regulations ensure that technological advancements contribute positively to society, enhancing human welfare without infringing on individual rights or perpetuating inequalities. Thus, ethical considerations in AI regulations are critical for sustainable and responsible AI innovation.

What are the major challenges in implementing AI regulations?

Implementing AI regulations faces several major challenges, including the rapid pace of AI technological advancement, which often outstrips the regulatory development process. Balancing innovation with regulation is difficult, as overly stringent regulations may hinder technological progress, while lax regulations may fail to address risks effectively. There is also the challenge of international cooperation, as AI technologies operate across borders, necessitating a concerted effort to establish global standards and norms. Ensuring the adaptability of regulations to accommodate future technological developments without necessitating constant revisions is another challenge. Furthermore, the complexity of AI systems makes it difficult to establish clear accountability and transparency standards. These challenges require thoughtful approaches to develop effective, flexible, and enforceable AI regulations.

How does a collaborative approach improve AI safety and regulation?

A collaborative approach significantly improves AI safety and regulation by pooling expertise, resources, and perspectives from various stakeholders, including governments, industry leaders, academia, and civil society. This multidisciplinary collaboration fosters the development of comprehensive and balanced regulations that address diverse concerns and challenges associated with AI technologies. It enhances the ability to anticipate potential risks and devise effective mitigation strategies. Collaborative efforts also facilitate the establishment of global norms and standards, promoting consistency in AI safety and ethical practices across borders. Furthermore, it encourages innovation within a safe and ethical framework, ensuring that AI technologies are developed and deployed in ways that benefit society as a whole.

What future trends can we expect in AI regulations?

Future trends in AI regulations are likely to include a greater emphasis on ethical and responsible AI, focusing on ensuring fairness, transparency, and accountability in AI systems. We can expect an increase in the development of sector-specific regulations, addressing the unique challenges and risks associated with AI applications in healthcare, finance, transportation, and other critical areas. There will also be a push towards harmonizing AI regulations at the international level to ensure consistent standards across borders, facilitating global cooperation and compliance. Additionally, adaptive regulatory frameworks that can evolve with technological advancements will become more prevalent, allowing for flexibility and responsiveness to emerging challenges. Overall, future AI regulations will aim to balance innovation with safeguarding public interest and welfare.

How do AI regulations impact digital marketing practices?

AI regulations impact digital marketing practices by setting boundaries on data collection, usage, and the deployment of AI-driven advertising technologies. Regulations such as the General Data Protection Regulation (GDPR) in the European Union enforce strict consent requirements for personal data collection and use, affecting personalized advertising and customer profiling activities. AI regulations can also mandate transparency in automated decision-making processes, requiring digital marketers to disclose how AI algorithms influence the content, targeting, and timing of advertisements. Compliance with these regulations necessitates adjustments in digital marketing strategies, prioritizing ethical data practices and transparent AI applications. While AI regulations may pose challenges, they also encourage the adoption of best practices that enhance trust and engagement with consumers.

What is a risk-based approach in the context of AI regulations?

A risk-based approach in the context of AI regulations involves tailoring regulatory requirements based on the level of risk that specific AI applications pose to individuals and society. This approach categorizes AI systems according to their potential to cause harm, applying stricter scrutiny and regulatory measures to high-risk applications, such as those impacting health, safety, and fundamental rights, while allowing more flexibility for lower-risk AI applications. The risk-based approach aims to ensure that regulatory efforts are proportionate, focusing resources and attention on areas where AI poses significant challenges. This method facilitates innovation by not unduly burdening low-risk AI developments with excessive regulation, while ensuring that adequate safeguards are in place for critical applications.

How can AI assist in achieving regulatory compliance in digital marketing?

AI can assist in achieving regulatory compliance in digital marketing by automating data management processes to ensure adherence to privacy laws and regulations. For instance, AI-driven systems can help in accurately mapping data flows, identifying and classifying personal information, and managing consent preferences efficiently. AI can also monitor digital marketing activities in real time, detecting and alerting to potential compliance issues, such as unauthorized data sharing or improper ad targeting. Additionally, AI technologies can enhance transparency and accountability by providing detailed records of data usage and decision-making processes, facilitating audits and regulatory reviews. By leveraging AI, digital marketers can navigate complex regulatory landscapes more effectively, ensuring that their practices align with legal and ethical standards.
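
Identifying and classifying personal information can start with simple pattern matching before any machine learning is involved. The Python sketch below is a minimal illustration under stated assumptions: it detects email addresses and phone numbers with regular expressions and replaces them with typed placeholders. The patterns and the `classify_and_redact` function are hypothetical; they will miss many kinds of personal data and can produce false positives (some date formats, for example, match the phone pattern), so an AI-based classifier or a dedicated PII-detection tool would be needed for real coverage. Redaction like this is also not necessarily anonymization in the legal sense.

```python
import re

# Simplified, hypothetical patterns: they miss many kinds of personal data
# and can also produce false positives (some date formats match PHONE).
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "PHONE": re.compile(r"\+?\d[\d ()-]{7,}\d"),
}


def classify_and_redact(text: str) -> tuple[str, dict[str, int]]:
    """Replace detected PII with typed placeholders and count each type found."""
    counts: dict[str, int] = {}
    for label, pattern in PII_PATTERNS.items():
        # subn returns the new string and the number of replacements made.
        text, n = pattern.subn(f"[{label}]", text)
        if n:
            counts[label] = n
    return text, counts


if __name__ == "__main__":
    redacted, counts = classify_and_redact(
        "Contact jane.doe@example.com or +351 912 345 678 for the offer."
    )
    print(redacted)  # Contact [EMAIL] or [PHONE] for the offer.
    print(counts)    # {'EMAIL': 1, 'PHONE': 1}
```

The per-type counts double as a simple classification report, which is the kind of record that can support the audits and regulatory reviews mentioned above.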
